By 2020, Artificial Intelligence (AI) and related technologies will be found in a wide range of businesses, in a large number of software packages, and in our daily lives. AI will be one of the top five investment priorities for at least 30% of Chief Information Officers by 2020. This new gold rush has attracted global software manufacturers. Unfortunately, while the prospect of additional revenue has driven software company owners to invest in AI technology, the reality is that most companies lack the specialised personnel required to embrace AI.

A warning implicit in many industry surveys on AI, Machine Learning and their impact on current industries is that software developers should first focus on understanding the customer's needs and the potential benefits of AI before chasing the hype, a trend that has been dubbed “AI Washing”.

The current trust deficit in the “capabilities of tech-enabled solutions” will diminish over the next ten years.

The impact of AI in the coming decade

Over the next decade, we’ll see a dramatic transition from scepticism and partial suspicion to complete reliance on AI and other advanced technologies. The majority of AI-powered applications are aimed at consumers, which is another compelling reason for mainstream users to overcome their distrust over time. The Citizen Data Science community will pave the way for a new technological order by gaining more exposure to, and access to, technological solutions for their daily activities.

While technologies like cloud computing make business processes more agile, AI and Machine Learning can directly influence business outcomes. Since the post-industrial period, people have sought to build a machine that behaves like a person. The thinking machine is AI’s greatest gift to humanity, and its arrival has completely altered the business landscape. In recent years, self-driving cars, digital assistants, robotic factory workers, and smart cities have all demonstrated that intelligent machines are viable. AI has altered almost every industry sector, including retail, manufacturing, finance, healthcare, and media, and its reach is still expanding.

The Future of Machine Learning

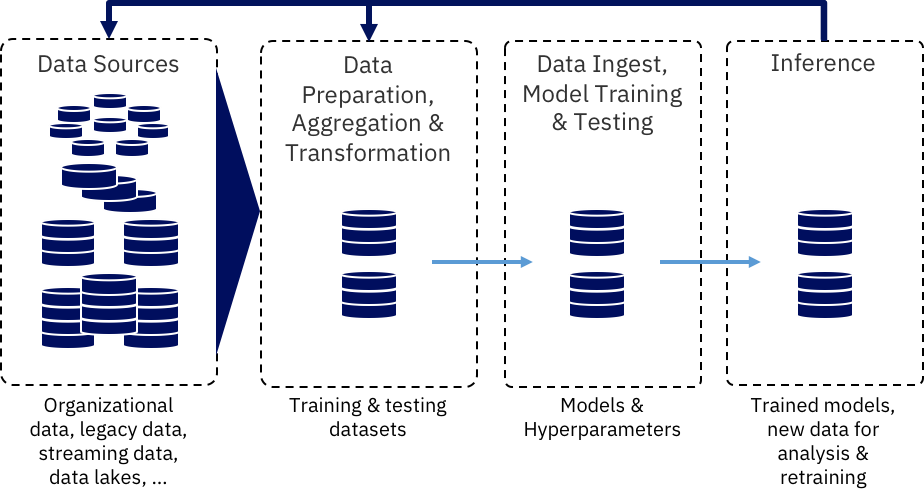

Based on current technology and developments, we can assume that all AI systems, large or small, will include some form of machine learning. As machine learning becomes more important in corporate applications, it is likely to be delivered as a cloud-based service known as Machine Learning-as-a-Service (MLaaS). Connected AI systems will allow ML algorithms to learn continuously from new data as it emerges on the internet. Hardware vendors will race to increase processing power for ML workloads and will be driven to redesign their machines to better accommodate machine learning.

Some Predictions about Machine Learning

- Multiple Technologies in Machine Learning: In many ways, the Internet of Things has benefited Machine Learning. Various algorithms are now being combined to improve learning capabilities, and collaborative learning that draws on multiple algorithms is likely to become common.

- ML developers will have access to APIs to develop and deploy “smart applications” in a personalised computing environment. In some ways, this resembles “assisted programming”. Developers can simply integrate facial, speech, and visual recognition features into their applications using these API kits.

- Quantum computing will dramatically speed up the execution of machine learning algorithms that process high-dimensional vectors. This is expected to be the next major breakthrough in machine learning research.

- Future advances in “unsupervised ML algorithms” will lead to better business outcomes.

- Tuned Recommendation Engines: ML-enabled services will become more accurate and relevant. Recommendation engines, for example, will be significantly better tailored to each user’s unique likes and tastes.
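A recommendation engine tailored to a user’s tastes can be illustrated with a simple content-based approach. The sketch below is only an illustration under stated assumptions: each item is described by a hand-made feature vector (the catalogue, item names and genre features are hypothetical), the user’s taste profile is the average of the vectors of items they liked, and unseen items are ranked by cosine similarity to that profile. Real-world engines typically learn item and user representations from behavioural data, for example with collaborative filtering, rather than relying on fixed features.

```python
import math

# Hypothetical catalogue: each item maps to [action, comedy, documentary] scores.
CATALOGUE = {
    "film_a": [1.0, 0.0, 0.0],
    "film_b": [0.9, 0.1, 0.0],
    "film_c": [0.0, 1.0, 0.0],
    "film_d": [0.0, 0.0, 1.0],
    "film_e": [0.8, 0.2, 0.0],
}


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # an all-zero vector carries no taste information
    return dot / (norm_a * norm_b)


def build_profile(liked_items, catalogue):
    """Average the feature vectors of the items the user liked."""
    vectors = [catalogue[item] for item in liked_items]
    return [sum(column) / len(vectors) for column in zip(*vectors)]


def recommend(liked_items, catalogue, top_n=2):
    """Rank items the user has not seen by similarity to their taste profile."""
    profile = build_profile(liked_items, catalogue)
    scored = [
        (item, cosine_similarity(profile, features))
        for item, features in catalogue.items()
        if item not in liked_items
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return [item for item, _ in scored[:top_n]]


if __name__ == "__main__":
    # A user who liked an action film is steered towards other action-heavy films.
    print(recommend(["film_a"], CATALOGUE))
```

For a user who liked only `film_a`, the two action-heavy titles `film_b` and `film_e` rank highest, while the comedy and documentary score zero. Averaging liked vectors keeps the sketch short; a tuned engine would also weight recent interactions and explicit ratings.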

Will AI and ML impact the cyber security industry?

According to current AI and ML research trends, advances in cyber security have pushed ML algorithms to the next level of learning, implying that future security-centric AI and ML applications will be distinguished by their speed and accuracy. The article “Machine Learning, Artificial Intelligence, and the Future of Cyber Security” tells the complete story. This emerging practice could help Data Scientists and cyber security specialists work together towards common software development goals.

Benefiting Humanity: AI and ML in Core Industry Sectors

It’s difficult to overlook the global impact of “AI Washing” in today’s commercial market, as well as how AI and machine learning may transform application development markets in the future.

AI and machine learning have often been compared to the arrival of electricity during the Industrial Revolution. Like electricity, these cutting-edge technologies have ushered in a new age in the history of information technology.

Today, AI and machine learning-powered platforms are transforming the way business is conducted across all industries. These technologies are progressively bringing about dramatic changes in a variety of sectors, including the following:

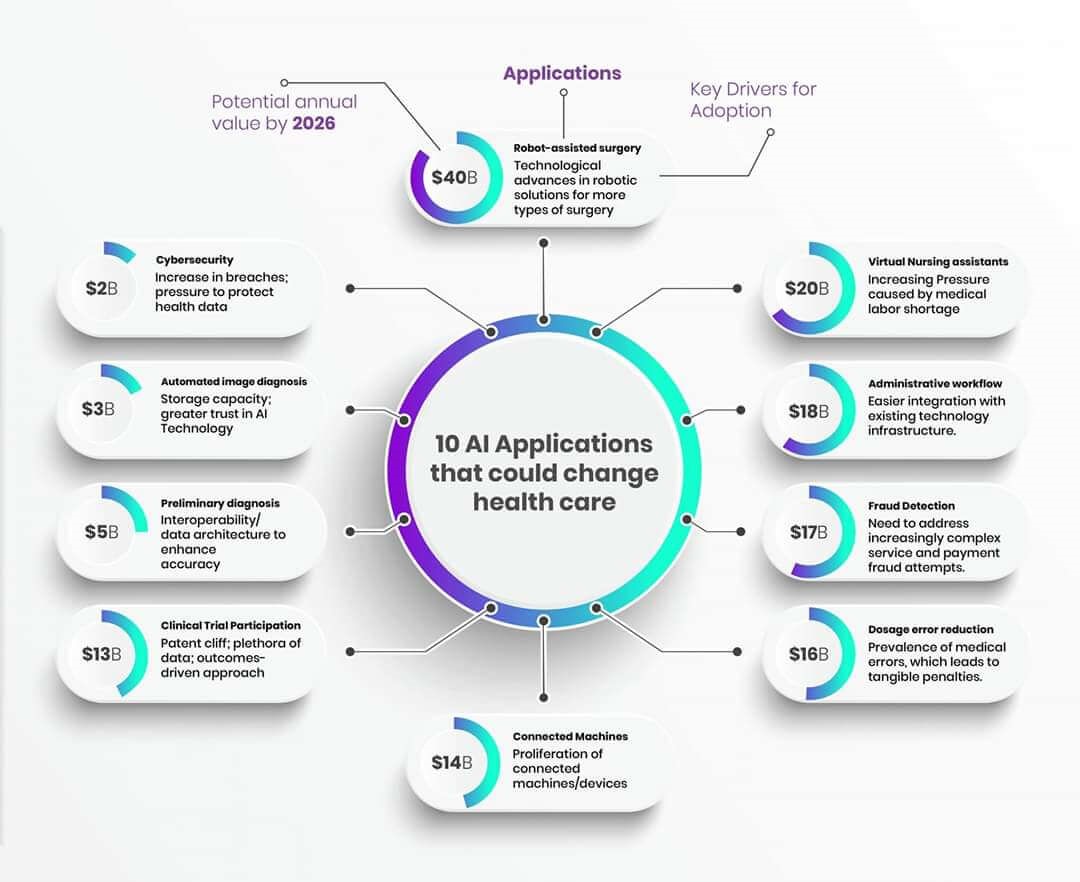

- Healthcare: Human practitioners and robots will gradually work together to improve outcomes. Smart, AI-enabled equipment is expected to provide faster and more accurate diagnoses of patient ailments, allowing practitioners to see more patients.

- Financial Services: The article “AI and Machine Learning are the New Future Technology Trends” looks at how new technologies such as blockchain are affecting India’s capital markets. Capital-market operators, for example, can use these technologies to forecast market movements and detect fraud. AI technologies not only open up new business models in the financial sector but also strengthen the position of AI technologists in the business-investment ecosystem.

- Real Estate: Contactually.com, an innovative CRM system for the real estate industry, was created exclusively to link investors with entrepreneurs in Washington, DC. Machine learning algorithms add to the power of the static system, transforming it into a live, interactive machine that listens, approves, and suggests.

- Administration of Databases: Because database administration involves many repetitive tasks, AI technology can automate many of the procedures and duties in a typical DBA workflow. Nowadays, DBAs are equipped with modern AI-based tools so that they can make value-added contributions to their organisations rather than just executing routine jobs.

- Personal Devices: According to several analysts, AI represents a game changer for the personal-device sector. By 2020, around 60% of personal-device manufacturers will use AI-enabled cloud platforms to deliver better functionality and personalised services. Artificial intelligence will provide an “emotional user experience.”