Physics, in its age-old quest to unravel the mysteries of nature, has become one of the most powerful enablers of innovation and discovery, driving remarkable advances in both academia and industry. Many technological advances have grown out of curiosity-driven research in fundamental areas of Physics, bringing tremendous social and economic benefits and a deep impact on our daily lives. One such significant contribution of Physics is to the flourishing field of Medical Science.

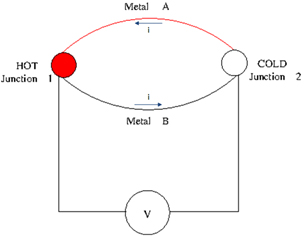

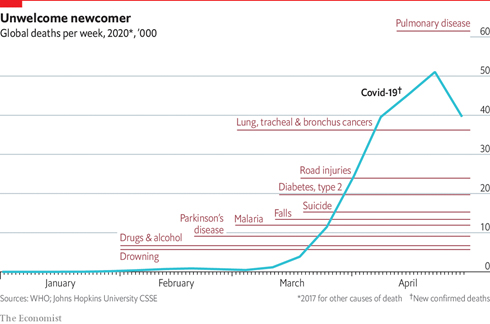

Fig. 1: The global death toll in 2020 (source: https://www.economist.com/graphic-detail/2020/05/01/covid-19-has-become-one-of-the-biggest-killers-of-2020)

Cancer, arguably one of the biggest curses of human civilization, kills millions of people around the world every year. In the US alone, an estimated 1.8 million new cases were expected to be diagnosed in 2020. This year, according to a report from the World Health Organization (WHO), Cancer remains one of the biggest killers of human lives, alongside COVID-19 and pulmonary diseases.

Cancer is a disease caused by uncontrolled cell division. The rapid cell division usually produces a tumor, which can then spread and destroy the surrounding tissues. Research in modern Physics plays a crucial role in improving both the diagnosis and the treatment of Cancer. For example, it has produced various imaging techniques that let us see beyond the obvious. Innovative methods based on different types of radiation allow medical physicists not only to detect cancerous tissue but also to kill cancer cells in a controlled and safe manner. Research in fundamental areas of Physics, such as Cosmology, Astrophysics, Nuclear Physics and Particle Physics, has made it possible to understand, generate and manipulate that radiation. Many recent advances in cancer therapy come from novel radiation detectors (which act as imaging cameras) and other instruments originally designed for physics research, combined with advanced software.

Different Imaging Methods used to detect Cancer

- Computed Tomography (CT) Scan:

In 1963, physicist Allan Cormack devised a method for computed tomography, reconstructing an image from X-ray measurements taken at many angles around the body. In 1971, Godfrey Hounsfield at the EMI laboratories built a scanner that reconstructed internal anatomy from multiple X-ray images taken around the body. Cormack and Hounsfield shared the 1979 Nobel Prize in Physiology or Medicine for this contribution.
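The idea of recovering internal structure from many X-ray measurements can be illustrated with a toy example. Each X-ray path is attenuated according to the Beer-Lambert law, $I = I_0 e^{-\int \mu\,dx}$. Taking $-\ln(I/I_0)$ therefore gives the sum of the attenuation coefficients along that path, and with enough paths at different angles the individual voxel values can be solved for.

The minimal Python/NumPy sketch below assumes a hypothetical 2x2 slice with made-up attenuation coefficients. It is a simple algebraic reconstruction, not the filtered back-projection used in clinical scanners.

```python
import numpy as np

# Hypothetical 2x2 slice: linear attenuation coefficients (1/cm), row-major [a, b, c, d].
# These values are made up for illustration only.
mu_true = np.array([0.20, 0.19, 0.45, 0.19])
voxel_cm = 1.0                      # assumed voxel edge length in cm
diag_cm = np.sqrt(2.0) * voxel_cm   # path length through one voxel along its diagonal

# Each row describes one X-ray path: the length (cm) it travels through each voxel.
A = np.array([
    [voxel_cm, voxel_cm, 0.0, 0.0],  # horizontal ray through the top row (a, b)
    [0.0, 0.0, voxel_cm, voxel_cm],  # horizontal ray through the bottom row (c, d)
    [voxel_cm, 0.0, voxel_cm, 0.0],  # vertical ray through the left column (a, c)
    [0.0, voxel_cm, 0.0, voxel_cm],  # vertical ray through the right column (b, d)
    [diag_cm, 0.0, 0.0, diag_cm],    # diagonal ray through a and d
])

I0 = 1.0e6                     # incident X-ray intensity (arbitrary units)
I = I0 * np.exp(-A @ mu_true)  # Beer-Lambert attenuation along each path

# The detectors measure I; -ln(I / I0) recovers each path's line integral of mu.
p = -np.log(I / I0)

# Solve A @ mu = p for the voxel values (least squares accepts the extra ray).
mu_rec, *_ = np.linalg.lstsq(A, p, rcond=None)
print(np.round(mu_rec.reshape(2, 2), 3))
```

Rows and columns alone are not enough here: the four row/column sums are linearly dependent. The extra diagonal ray is what makes the system solvable, which mirrors why real scanners need projections from many angles.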

- Positron Emission Tomography (PET) Scan:

Another well-known imaging technique is the Positron Emission Tomography (PET) scan. The first PET image was taken by biophysicist Michael Phelps and nuclear chemist Edward Hoffman in 1973.

PET relies on a type of radioactive decay in which positrons, the antiparticles of electrons, are emitted. A positron-emitting isotope is attached to a biologically active molecule, which is injected into the body and accumulates in the targeted cells. Each emitted positron soon annihilates with a nearby electron, a process known as pair annihilation. The mass of the pair is converted into energy according to the famous relation $E = mc^2$. That energy is released as photons (gamma rays), which are picked up by the detectors (imaging cameras) and used to construct a three-dimensional image.
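The minimal Python sketch below applies $E = mc^2$ to an electron-positron pair. It assumes the pair annihilates essentially at rest and uses CODATA values for the constants; the function name is illustrative. The total rest energy is shared by two photons, so each carries about 511 keV.

```python
# Physical constants (CODATA 2018 values, SI units)
M_E = 9.1093837015e-31   # electron (and positron) rest mass, kg
C = 299_792_458.0        # speed of light, m/s
EV = 1.602176634e-19     # joules per electronvolt


def annihilation_photon_energy_kev() -> float:
    """Energy of each photon when an electron-positron pair annihilates at rest.

    The total rest energy 2 * m_e * c**2 is shared equally by two photons,
    so each photon carries m_e * c**2.
    """
    total_joules = 2 * M_E * C**2          # E = mc^2 for the whole pair
    per_photon_joules = total_joules / 2   # two photons emitted back to back
    return per_photon_joules / EV / 1e3    # convert J -> keV


print(f"{annihilation_photon_energy_kev():.1f} keV")
```

PET scanners look for two such photons arriving at opposite detectors at nearly the same time (coincidence detection). This places the annihilation somewhere on the line joining the two detectors.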

- Single Photon Emission Computed Tomography (SPECT) Scan:

Single photon emission computed tomography (SPECT) scanning is a non-invasive nuclear imaging technique, frequently used in Oncology, that examines blood flow to tissues and organs. Radioactive tracers are injected into the blood to image the blood flow to major organs such as the brain and heart. The tracers emit gamma rays, which are detected by a gamma camera, and a computer then reconstructs the data into 3D images. In this case the tracer remains in the bloodstream rather than being absorbed by the surrounding tissues, which limits the images to areas where blood flows.

- Magnetic Resonance Imaging (MRI):

In 1980, another breakthrough imaging technique was reported: Magnetic Resonance Imaging (MRI). It relies on the fact that a magnetic moment tends to align itself with an externally applied magnetic field.

Fig. 2: MRI scanner (source: https://www.cancerresearchuk.org/about-cancer/cancer-in-general/tests/mri-scan)

When a human body is placed in a strong magnetic field, the hydrogen nuclei (protons) of the water molecules in the body tend to align along the field direction. MRI usually uses a uniform magnetic field of about 1 to 3 tesla. Next, a radio-frequency (RF) pulse is applied perpendicular to the static magnetic field, tilting the magnetic moments of the hydrogen nuclei away from the field direction. When the RF pulse is switched off, the magnetic moments relax back to their initial alignment. As they do so, they emit a radio-frequency signal of their own, which is picked up by receiver coils placed around the body. The detected signal is then reconstructed into 3D grey-scale images.
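For the RF pulse to tilt the proton magnetic moments, it has to oscillate at their resonance (Larmor) frequency. This frequency is proportional to the static field: $f = \bar{\gamma} B_0$, with $\bar{\gamma} \approx 42.58$ MHz/T for hydrogen nuclei.

The short Python sketch below evaluates this for the 1 to 3 tesla range quoted above. The constant is the standard CODATA proton value; the function name is illustrative.

```python
# Gyromagnetic ratio of the proton divided by 2*pi, in MHz per tesla (CODATA 2018).
GAMMA_BAR_H_MHZ_PER_T = 42.577478


def larmor_frequency_mhz(b0_tesla: float) -> float:
    """Precession (resonance) frequency of hydrogen nuclei in a static field B0."""
    return GAMMA_BAR_H_MHZ_PER_T * b0_tesla


for b0 in (1.0, 1.5, 3.0):
    print(f"B0 = {b0:.1f} T -> RF frequency ~ {larmor_frequency_mhz(b0):.2f} MHz")
```

These frequencies fall in the radio band, which is why MRI uses RF pulses and RF coils rather than visible light or X-rays.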

MRI offers much better soft-tissue contrast than X-ray imaging. The relaxation times, as well as the energy radiated by the magnetic moments, depend on the local environment and the chemical nature of the molecules. Physicians can therefore distinguish between various types of tissue based on these magnetic properties. In T1-weighted images, for instance, the faster the protons realign, the brighter the tissue appears. In 2003, Peter Mansfield of the University of Nottingham and Paul Lauterbur of the University of Illinois shared the Nobel Prize in Physiology or Medicine for their “discoveries concerning magnetic resonance imaging”.
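The link between relaxation speed and brightness can be sketched with the standard longitudinal (T1) recovery curve, $M_z(t) = M_0\,(1 - e^{-t/T_1})$. In a simplified T1-weighted picture, the signal is sampled after a repetition time TR. Tissue whose protons realign faster (shorter T1) has recovered more magnetization by then and appears brighter.

The T1 values and TR in this Python sketch are illustrative assumptions, not clinical reference data.

```python
import math


def longitudinal_recovery(t_ms: float, t1_ms: float, m0: float = 1.0) -> float:
    """Magnetization recovered t_ms after a 90-degree pulse (simple saturation-recovery model)."""
    return m0 * (1.0 - math.exp(-t_ms / t1_ms))


# Illustrative (assumed) T1 values in milliseconds; real values depend on tissue and field strength.
tissues_t1_ms = {"fast-relaxing tissue": 300.0, "slow-relaxing tissue": 2000.0}
TR_MS = 500.0  # assumed repetition time for a T1-weighted sequence

for name, t1 in tissues_t1_ms.items():
    signal = longitudinal_recovery(TR_MS, t1)
    print(f"{name}: T1 = {t1:.0f} ms, relative signal = {signal:.2f}")
```

Choosing a TR that is short compared with the longer T1 is what turns differences in relaxation time into differences in brightness.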

- Optical Coherence Tomography (OCT):

Optical coherence tomography (OCT) is a form of “optical ultrasound”. Near-infrared light is shone onto the tissue, and the light reflected back from the layers just beneath the surface is collected to form a very high-resolution image. Although OCT images are more detailed than MRI images, the light penetrates only a few millimeters. This makes OCT useful mainly for cancers close to a surface, such as those of the skin and esophagus.
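OCT's depth (axial) resolution is set mainly by the bandwidth of the light source rather than by focusing. For a source with a Gaussian spectrum, center wavelength $\lambda_0$ and full-width-at-half-maximum bandwidth $\Delta\lambda$, a commonly quoted free-space expression is:

$$\Delta z = \frac{2\ln 2}{\pi}\,\frac{\lambda_0^2}{\Delta\lambda}$$

The Python sketch below evaluates this for an assumed 1300 nm source with 100 nm bandwidth. These parameters are illustrative and not taken from a specific instrument.

```python
import math


def oct_axial_resolution_um(center_wavelength_nm: float, bandwidth_nm: float) -> float:
    """Free-space axial resolution of OCT for a Gaussian-spectrum source, in micrometers."""
    dz_nm = (2.0 * math.log(2.0) / math.pi) * center_wavelength_nm**2 / bandwidth_nm
    return dz_nm / 1000.0  # nm -> micrometers


# Assumed near-infrared source parameters (illustrative only).
print(f"{oct_axial_resolution_um(1300.0, 100.0):.1f} um")
```

A broader bandwidth gives finer depth resolution, on the scale of micrometers. Penetration depth, by contrast, is limited by scattering in tissue, which is why OCT stays near the surface.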

- Selected Ion Flow Tube mass spectrometry (SIFT):

Selected ion flow tube mass spectrometry is a technique developed by a group of astrophysicists at the University of Birmingham while they were investigating the chemistry of interstellar clouds. The technique can sense tiny amounts of gas and can be used to detect certain cancers by analyzing a sample of a patient’s breath.

Physicists are also developing imaging techniques based on low-energy, non-ionizing terahertz (THz) radiation. THz radiation can penetrate a few millimeters into human tissue with low water content, making it a promising candidate for detecting breast and skin cancer at a very early stage. The first THz cameras were developed by astrophysicists to image the distant universe.
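Why THz radiation is non-ionizing follows from the photon energy $E = hf$. The Python sketch below computes this energy for an assumed frequency of 1 THz. It then compares the result with 13.6 eV, the ionization energy of a hydrogen atom, used here only as a representative scale.

```python
H = 6.62607015e-34    # Planck constant, J*s (exact SI value)
EV = 1.602176634e-19  # joules per electronvolt (exact SI value)


def photon_energy_ev(frequency_hz: float) -> float:
    """Photon energy E = h*f, converted to electronvolts."""
    return H * frequency_hz / EV


E_THZ = photon_energy_ev(1.0e12)  # 1 THz, assumed representative frequency
HYDROGEN_IONIZATION_EV = 13.6     # reference scale for ionization

print(f"1 THz photon: {E_THZ * 1e3:.2f} meV")
print(f"ratio to 13.6 eV: {E_THZ / HYDROGEN_IONIZATION_EV:.1e}")
```

At around 4 meV, a 1 THz photon carries thousands of times too little energy to ionize an atom. This is the sense in which THz imaging is gentler than X-ray imaging.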

Treatments of Cancer using the concepts of Physics

- Radiotherapy:

Radiotherapy is one of the most effective treatments for Cancer. In this technique, high-energy radiation is selectively deposited in the cancerous cells. This radiation includes not only X-rays but also beams of high-energy particles such as electrons and protons. If the dose is right, the radiation kills the cells by breaking the DNA strands in their nuclei. Modern proton beam therapy is one of the most precise forms of radiation treatment available today: it can destroy the tumor selectively while largely sparing the surrounding healthy tissues and organs.
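Part of what makes proton beams precise is how they deposit energy. Unlike X-rays, protons deposit most of their energy near the end of their path (the Bragg peak) and then stop, so the beam energy sets how deep the dose lands. A common empirical approximation for the range in water is the Bragg-Kleeman rule, $R \approx \alpha E^{p}$.

The Python sketch below uses fitted values often quoted for water ($\alpha \approx 0.0022$ cm/MeV$^p$, $p \approx 1.77$). Its output should be read as approximate.

```python
def proton_range_water_cm(energy_mev: float, alpha: float = 0.0022, p: float = 1.77) -> float:
    """Approximate proton range in water via the empirical Bragg-Kleeman rule R = alpha * E**p.

    alpha (cm / MeV**p) and p are approximate fitted constants for water.
    """
    if energy_mev <= 0:
        raise ValueError("energy must be positive")
    return alpha * energy_mev**p


for e in (70.0, 150.0, 230.0):
    print(f"{e:.0f} MeV proton -> range ~ {proton_range_water_cm(e):.1f} cm of water")
```

Tuning the beam energy therefore places the Bragg peak at the depth of the tumor, with little dose delivered beyond it.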

Fig. 3: Radiation therapy (source: https://onco.com/about-cancer/cancer-types/bone-cancer/treatment/radiation-therapy/)

- Brachytherapy:

Brachytherapy is another well-known cancer treatment. Artificially produced radioactive “seeds”, enclosed inside protective capsules, are placed at the tumor, where they emit beta or gamma rays to deliver a highly localized dose.
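Because the seeds stay at the tumor, the dose rate they deliver falls as the isotope decays. As an assumed example, take an iodine-125 seed, a commonly used brachytherapy isotope with a half-life of about 59.4 days. Its remaining activity follows $A(t) = A_0\,2^{-t/T_{1/2}}$, which the Python sketch below evaluates. The isotope choice and time points are illustrative.

```python
def remaining_activity_fraction(t_days: float, half_life_days: float) -> float:
    """Fraction of the initial activity left after t_days of exponential decay."""
    return 0.5 ** (t_days / half_life_days)


I125_HALF_LIFE_DAYS = 59.4  # iodine-125, assumed example isotope

for days in (30, 60, 120, 365):
    frac = remaining_activity_fraction(days, I125_HALF_LIFE_DAYS)
    print(f"after {days:3d} days: {frac:.1%} of initial activity")
```

Most of the dose is therefore delivered within the first few half-lives after the seeds are placed.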

- Boron neutron capture therapy:

Boron neutron capture therapy is used mostly to treat cancers of the head and neck. A compound containing a stable (non-radioactive) isotope of boron, boron-10, is injected and accumulates preferentially in the cancer cells, which are then irradiated with a beam of neutrons. Boron-10 absorbs neutrons much more readily than human tissue does. In doing so, it splits into a lithium ion and a high-energy alpha particle, which together deliver the required radiation dose to the tumor.
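The capture reaction can be written out as follows, using standard nuclear-data values rounded to two or three significant figures. About 2.79 MeV is released per capture. In roughly 94% of captures, the lithium nucleus is left in an excited state that promptly emits a 0.48 MeV gamma ray. Because the alpha particle and lithium ion travel only about one cell diameter in tissue, the dose stays concentrated in the boron-loaded cells.

$$
\begin{aligned}
{}^{10}\mathrm{B} + n \;\rightarrow\; [{}^{11}\mathrm{B}]^{*}
&\rightarrow {}^{4}\mathrm{He} + {}^{7}\mathrm{Li} + 2.79\ \mathrm{MeV} && (\approx 6\%)\\
&\rightarrow {}^{4}\mathrm{He} + {}^{7}\mathrm{Li}^{*} + 2.31\ \mathrm{MeV},\quad {}^{7}\mathrm{Li}^{*} \rightarrow {}^{7}\mathrm{Li} + \gamma\ (0.48\ \mathrm{MeV}) && (\approx 94\%)
\end{aligned}
$$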

With all these therapies, researchers and clinicians are working to reduce the death toll from this deadly disease. Today, with diseases such as COVID-19 and Cancer all around us, let us ask ourselves: are we advanced enough to protect ourselves, or do our vulnerabilities persist helplessly in the face of nature? Either way, the journey of Science will continue unstoppably, fighting against all odds for the betterment of humankind and a deeper understanding of nature.